CoreWeave Stock Breakdown

Get smarter on investing, business, and personal finance in 5 minutes.

Stock Breakdown

Welcome to the second edition of Five Minute Money! Unfortunately, I think we have already failed, as this post probably takes 10 minutes to read…

CoreWeave is one of the most interesting stocks of the AI trade.

It took only 5 years to go from a $33 million private market valuation in 2019 to just shy of a $100 billion valuation earlier this year…

And it has some of the most parabolic revenue growth of any business ever.

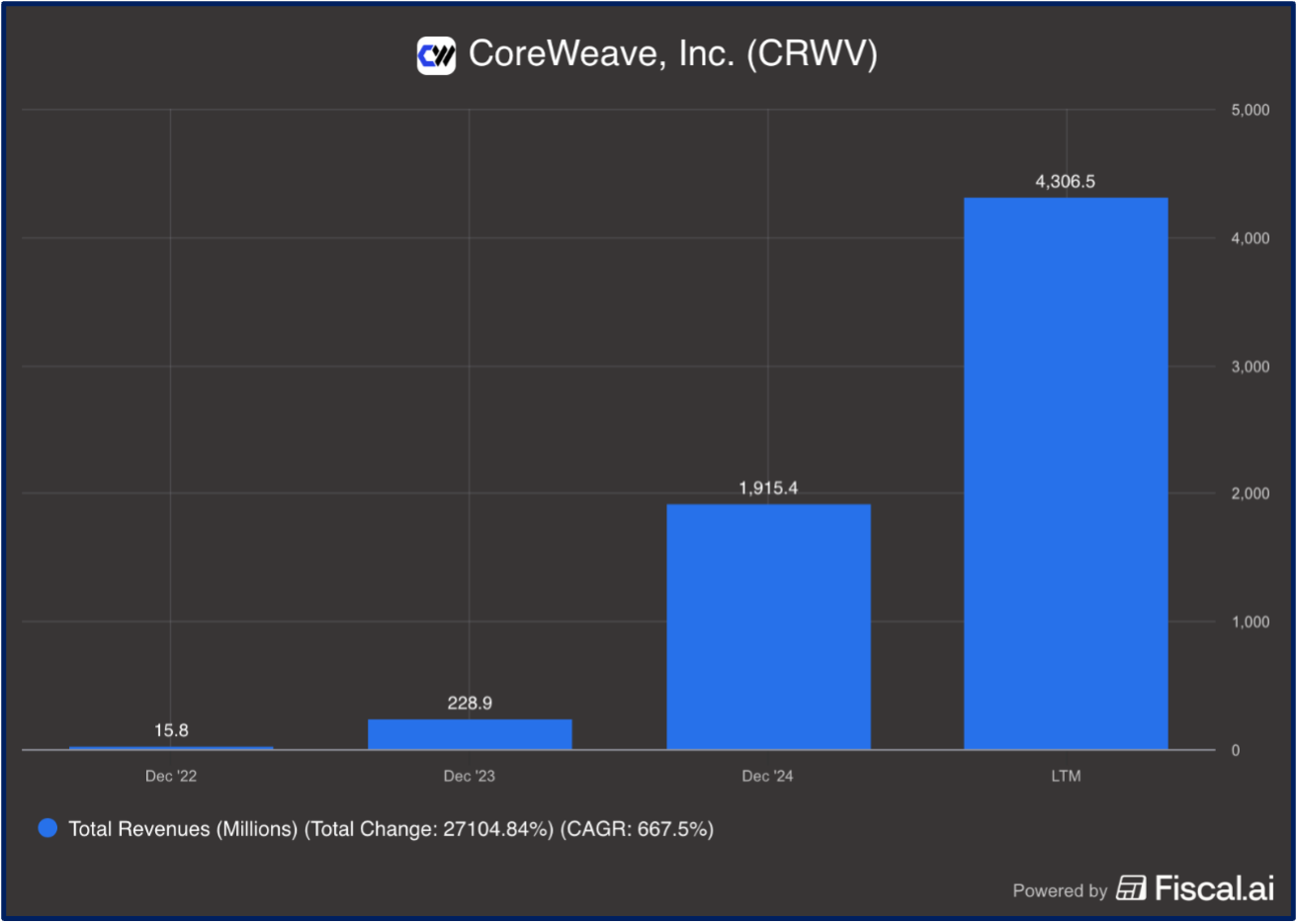

In 2022, they had just $15 million in revenues.

Hardly 3 years later, they are generating over $4.3 billion.

That is a 286x increase in revenues.
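For anyone who wants to check the math, here is a quick sketch using only the two revenue figures above. The division comes out to about 286.7x, which is where the 286x comes from. The annualized growth rate is an extra illustration that assumes the jump took exactly 3 years, which the article only states loosely.

```python
# Revenue figures quoted above, in millions of US dollars.
revenue_2022 = 15.0
revenue_now = 4_300.0

# Revenue multiple: how many times larger revenue is today.
multiple = revenue_now / revenue_2022

# Assumption (not stated precisely in the article): growth took exactly 3 years.
years = 3
implied_annual_growth = multiple ** (1 / years) - 1

print(f"Revenue multiple: {multiple:.1f}x")
print(f"Implied annual growth over {years} years: {implied_annual_growth:.0%}")
```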

And their revenue backlog is a whopping $55 billion.

Now, if you’ve heard of CoreWeave before, you probably have some suspicions about the company.

Perhaps you’ve heard about circular financing arrangements with an investor who is also a supplier… and a customer (Nvidia)?

Or heard about their unhinged embrace of debt collateralized on chips that have dubious longevity (they carry over $14 billion of debt, not including lease obligations).

But even if you haven’t heard of them… well you probably also have some suspicions just given their crazy growth.

How sustainable of a company is it if all of that revenue growth came in a mere couple years?

And how could they possibly do something that the large public cloud providers like Amazon, Google, and Microsoft couldn’t do?

Well at least some investors seemed to be very sure there was something special to CoreWeave, sending the stock from $40 at IPO to $183 at peak.

Now investors seem less sure…

So does CoreWeave actually have any defensible moat, or are they going to be written about in a few years as a clear example of investors’ irrational exuberance during an AI Bubble?

The Set Up.

CoreWeave started as a crypto mining company that purchased GPUs and used them to mine Ethereum.

After the price of Ethereum dropped 90% in 2018, they decided to pivot. They would rent out their GPUs to whoever wanted them. Initially, demand wasn’t that high.

However, a few years later, AI workloads started to boom. Lucky for CoreWeave, AI tended to run the best on GPUs.

Also lucky for CoreWeave, there was more demand for GPU-enabled workloads than there were GPUs…

As one of the only GPU-native cloud providers, they had inadvertently gotten themselves into a very fortuitous position.

The Business.

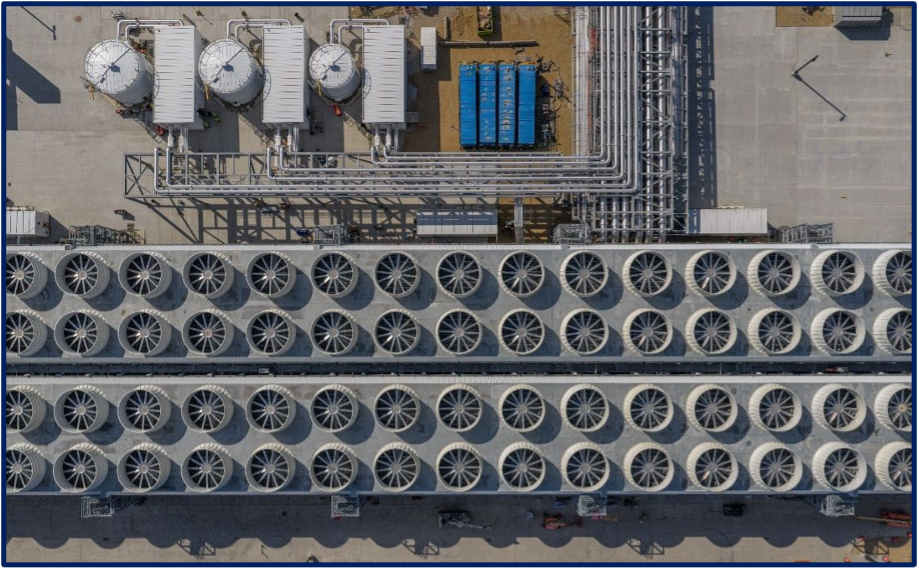

The business is pretty straightforward. They build data centers and fill them with GPU chips to rent out to customers on 2- to 5-year contracts on a “take-or-pay” basis.

Take-or-pay means that the customer must pay them regardless of how much of the service they actually use.

This is a key difference versus the way hyperscalers typically work, where customers only pay for their usage, or “on demand” as it’s called.

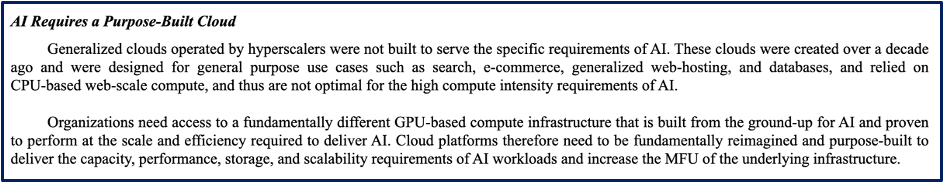

This is what makes hyperscalers so attractive in the first place to customers: they can instantly size up and size down their compute loads in accordance with their needs. As a result of this though, the hyperscalers need their architectures to be very flexible so they can service this large variety of different customer needs.

In contrast, CoreWeave contractually locks a customer into buying compute whether or not they use it. This means they don’t need to worry about flexibility. Instead, they can focus on performance.
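To make the difference concrete, here is a minimal sketch of the two billing models. The function names, the hourly rate, and the GPU-hour figures are all made up for illustration and are not CoreWeave’s or any hyperscaler’s actual pricing.

```python
def on_demand_bill(used_gpu_hours: float, rate_per_gpu_hour: float) -> float:
    """On demand: the customer pays only for the hours actually used."""
    return used_gpu_hours * rate_per_gpu_hour


def take_or_pay_bill(committed_gpu_hours: float, rate_per_gpu_hour: float) -> float:
    """Take-or-pay: the customer pays for the committed hours; usage is ignored."""
    return committed_gpu_hours * rate_per_gpu_hour


# Hypothetical numbers, chosen only to show the shape of the two models.
committed_hours = 1_000.0
rate = 2.0  # dollars per GPU-hour (made up)

for used_hours in (0.0, 400.0, 1_000.0):
    print(
        f"used={used_hours:>7.0f}h  "
        f"on-demand=${on_demand_bill(used_hours, rate):>8,.0f}  "
        f"take-or-pay=${take_or_pay_bill(committed_hours, rate):>8,.0f}"
    )
```

Under these assumptions the take-or-pay bill is the same in every row, which is exactly why the provider can plan capacity around it.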

This simple business model change, plus having a lot of GPUs (and power) in a capacity-constrained market, is why they have been so successful.

In fact, their contractual revenue backlog stands at an incredible $55 billion.

Is there anything unique about what they do?

Let’s break down some jargon.

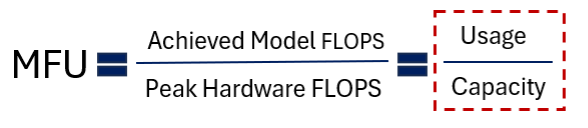

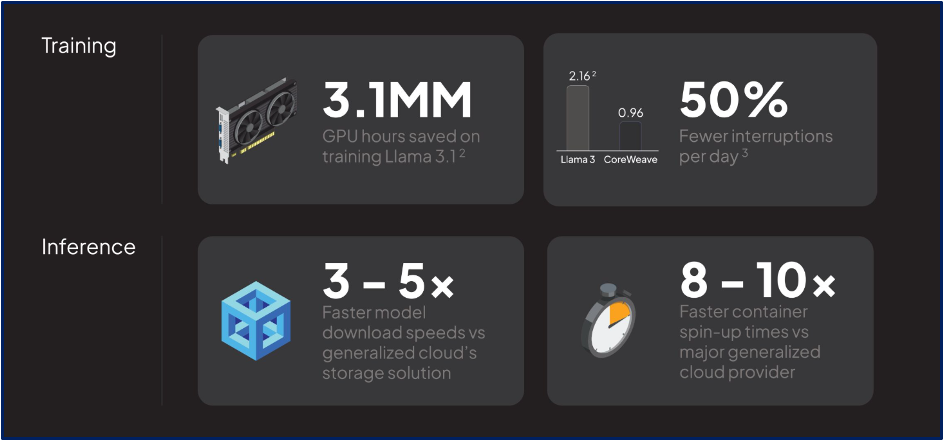

There is something called MFU, which stands for Model FLOPs Utilization.

Forget the industry verbiage. MFU is basically a measure of efficiency.

How much compute can actually be used versus the theoretical capacity?

Most models may get somewhere around 25-45% MFU.

CoreWeave can improve this by 10-20%.
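Here is a rough sketch of what those numbers mean. MFU is just the compute actually achieved divided by the theoretical peak. The article does not say whether the 10-20% improvement is relative (a multiplier on the baseline) or absolute (percentage points added), so the sketch shows both readings. The function names and the sample throughput numbers are illustrative assumptions.

```python
def mfu(achieved_flops_per_sec: float, peak_flops_per_sec: float) -> float:
    """Model FLOPs Utilization: achieved compute as a fraction of theoretical peak."""
    return achieved_flops_per_sec / peak_flops_per_sec


def relative_uplift(baseline_mfu: float, uplift: float) -> float:
    """Reading 1 (assumption): the improvement is a percentage of the baseline."""
    return baseline_mfu * (1 + uplift)


def point_uplift(baseline_mfu: float, uplift: float) -> float:
    """Reading 2 (assumption): the improvement is added as percentage points."""
    return baseline_mfu + uplift


# Hypothetical hardware: 400 units of useful compute out of a 1,000-unit peak.
print(f"Example MFU: {mfu(400.0, 1_000.0):.0%}")

# Ranges quoted in the article: 25-45% baseline MFU, 10-20% improvement.
for baseline, uplift in ((0.25, 0.10), (0.45, 0.20)):
    print(
        f"baseline={baseline:.0%}, uplift={uplift:.0%}: "
        f"relative reading={relative_uplift(baseline, uplift):.1%}, "
        f"point reading={point_uplift(baseline, uplift):.1%}"
    )
```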

That sounds pretty impressive… and also surprising, right?

How can they have better performance than Amazon, Google, and Microsoft?

It simply comes back to the business model and the legacy architectures of the hyperscalers. They are focused on flexibility while CoreWeave is focused on efficiency.

CoreWeave happened to be a GPU-native cloud, and together with their take-or-pay model, they are well positioned to offer customers a product geared towards max efficiency.

(If you like making things overcomplicated with fancy words, we can say that hyperscalers historically used “Hypervisors in Virtualized Environments”, which means the infrastructure isn’t actually split up for an individual customer’s workload. Instead, customers share the same infrastructure stack. In contrast, CoreWeave sets up “Containerized Environments”, where customers can access that compute directly without sharing with other workloads. This also explains why the customer must pay for the compute regardless of whether they use it.)

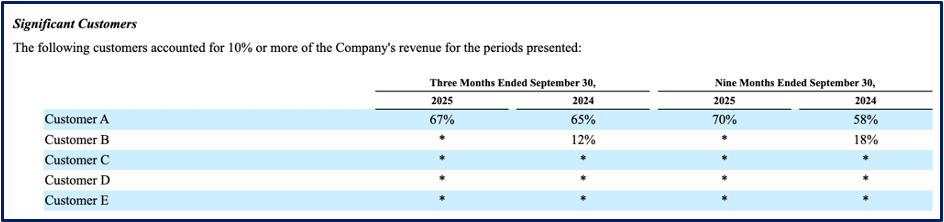

Microsoft is, in fact, CoreWeave’s largest customer, responsible for a whopping 70% of revenues. CoreWeave also has large contracts with OpenAI and Meta worth tens of billions, which haven’t ramped up yet.

Below is a chart with some numbers that show how much more efficient CoreWeave’s GPU-native architecture is for AI.

So of course, Amazon, Google, Meta, and Microsoft said “GG” to CoreWeave and CoreWeave is just going to continue to be the most efficient provider. That is that.

We hope you enjoyed reading this week’s…

Well no, of course not. But why does big tech need CoreWeave in the first place? Can’t Big Tech do exactly what CoreWeave does—but probably better because of their massive resources and more talented employee base?

The short answer is yes, and they are.

All of the hyperscalers plus Meta and xAI are building massive GPU-native data centers that will be just as efficient if not more so.

But the other key part of the story is Nvidia.

Nvidia is of course the largest seller of GPU chips. But their biggest customers (Amazon, Google, Microsoft, and Meta) are all trying to replace Nvidia’s GPUs with chips of their own design.

Nvidia of course wants their GPU chips to be dominant and is not too fond of big tech trying to replace them.

So, rather than help potential competitors get stronger, they have supported CoreWeave, which has no ambitions of creating its own chips.

Nvidia is an investor in and customer of CoreWeave (Nvidia has a small cloud service), but most importantly they are a supplier. And as a supplier they tend to give CoreWeave priority access. CoreWeave was the first cloud provider to deliver many of Nvidia’s latest chips, which helps CoreWeave keep a leading position in AI workloads.

So basically CoreWeave is around as long as Nvidia wants them to be. Which isn’t a great spot to be in, but Nvidia has every reason to keep CoreWeave around.

Okay, so sounds great. A $55 billion revenue backlog, Nvidia is going to prioritize selling chips to them, and even Microsoft, Meta and OpenAI are customers.

What could go wrong?

So Much…

Risks.

There are 5 key risks that could, if not sink CoreWeave, at least cause a lot of pain.

1) The first is competition.

Hyperscalers are building GPU-native data centers and their efficiency could be better than CoreWeave’s as they have better talent and more resources. They are spending around $100 billion each. This is a lot of new capacity that will give customers an option to go elsewhere.

2) AI workload prices fall.

This could happen because demand falls, or because capacity grows so much that providers have to compete on price.

Right now there is a lot of demand for AI workloads as all of these AI companies compete for a leading product.

At some point though, market share will stabilize and investments will be rationalized. When that happens, there is likely to be an excess of supply and prices will fall to meet demand.

3) Counterparty and concentration risk.

They have a lot of revenue concentrated with a few customers, and if any of them decided not to pay or ran into liquidity problems of their own, it could bankrupt CoreWeave because of its debt (risk 6).

Even Microsoft could try to renegotiate their contracts if the AI bubble pops.

4) Execution Risk.

It is hard to build enough data centers to support a $55 billion backlog. They have already had some delays, and more delays could result in lost revenue and customers looking elsewhere.

5) Nvidia GPUs lose dominance.

If Google’s TPU chips become a better option for AI, CoreWeave is at a disadvantage because their entire stack is built on Nvidia GPUs.

6) Oh… and LEVERAGE.

They have over $22 billion in debt and lease obligations, and their current interest expense is $1 billion annually.

LTM operating profit was just $150mn…

Without further financing, they have enough cash on hand for about 1.5 years.
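A quick back-of-the-envelope on the leverage point, using only the two figures above ($1 billion of annual interest and $150mn of LTM operating profit). Interest coverage is a standard ratio of operating profit to interest expense. Treating the LTM operating profit as a stand-in for a full year of earnings power is an assumption made for illustration.

```python
# Figures quoted above, in millions of US dollars.
operating_profit_ltm = 150.0
annual_interest_expense = 1_000.0

# Interest coverage: how many times operating profit covers the interest bill.
# A value below 1.0x means operating profit alone does not cover interest.
interest_coverage = operating_profit_ltm / annual_interest_expense

# Gap that has to be funded from cash on hand or new financing.
annual_shortfall = annual_interest_expense - operating_profit_ltm

print(f"Interest coverage: {interest_coverage:.2f}x")
print(f"Annual shortfall vs. interest: ${annual_shortfall:,.0f}mn")
```

On these numbers, operating profit covers only about 15% of the interest bill.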

An Old Business Model Reinvented.

CoreWeave isn’t the first to couple technology and financing. Long before CoreWeave, GPUs and AI, there was AerCap.

AerCap is a simple business. They buy aircraft from the manufacturers and lease them to airlines. In order to buy the planes in the first place, they take on a lot of debt.

The aircraft manufacturers want to sell planes and airlines don’t want to pay the upfront price. AerCap stands in between, very similarly to a specialized bank.

Planes also tend to be decent collateral because they depreciate fairly reliably. This means that if an airline goes out of business, AerCap can sell the plane to pay down its debt (or re-lease it).

Essentially though, they are a bank. Their main value add is taking on financing risk that the airlines or manufacturers don’t want to take on.

CoreWeave is rather similar with their over $22 billion of debt and lease obligations.

In comparison though, AerCap has higher supplier and customer diversity, its collateral is subject to less write-off risk, and it’s profitable…

What does the market value this stream of earnings at?

10x.

That is not to say that CoreWeave should trade at the same multiple, but rather that is what the financing aspect of the business is worth… i.e. not that much.

The key question is whether CoreWeave does have a unique model that leaves them as a player when capacity (likely) overshoots current demand.

Even if you believe AI workload demand is only going to increase, there could still be an “air pocket” where AI investments are pulled back temporarily… and that could bankrupt them.

What happens to them in that scenario?

And will they have the cash flow to survive the potential cyclicality of AI demand?

Nothing in this newsletter is investment advice nor should be construed as such. Contributors to the newsletter may own securities discussed. Furthermore, accounts contributors advise on may also have positions in companies discussed. Please see our full disclaimers here.